Emails pile up fast. Sorting them manually wastes time and energy. Training an AI email sorter can automate this, flagging spam, prioritizing important messages, and organizing promotions. This guide walks you through building one from scratch using NLP and machine learning. You’ll learn to prepare data, train a model, and test it. No advanced coding experience is required, just curiosity and a willingness to learn. Let’s dive in!

What Is an AI Email Sorter?

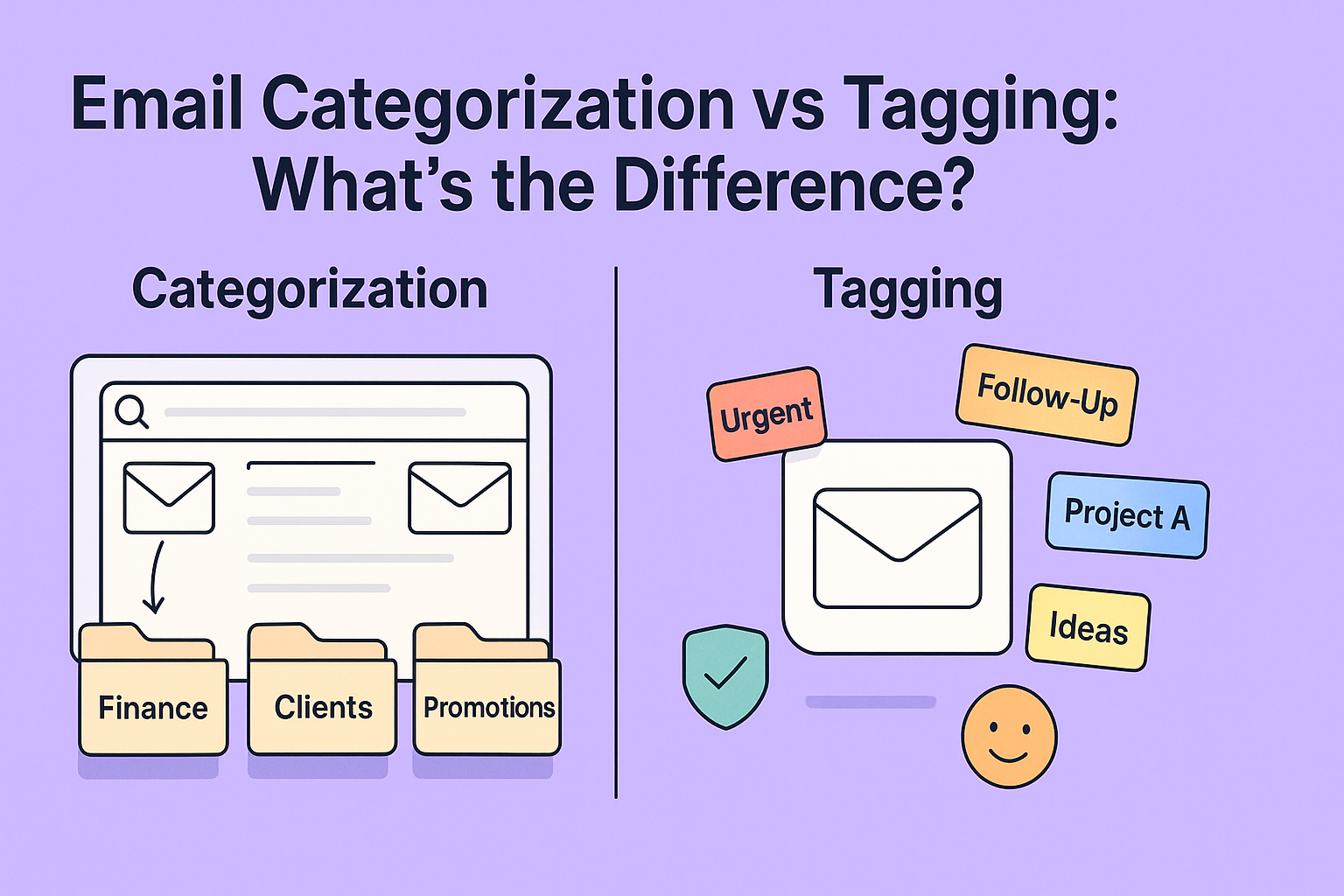

A trained AI email sorter uses algorithms to classify emails as spam, primary, or promotions. It’s smarter than basic spam filters, which rely on simple rules. An intelligent sorter learns patterns in email content, such as keywords or sender behavior, to make informed decisions.

Key Differences from Spam Filters

- Spam filters: Block obvious junk mail using fixed rules (e.g., “free viagra” triggers a block).

- AI sorters: Learn from data to categorize emails, even subtle ones, like separating newsletters from urgent work emails.

Use Cases

- Productivity: Prioritize emails from your boss or clients.

- Business: Route customer inquiries to the right team.

- Personal: Keep your inbox clean by sorting subscriptions.

Step 1: Preparing Your Email Dataset

To train an AI email sorter, you need data: lots of emails labeled as spam, primary, or promotions. Think of it like teaching a child to sort fruit by showing them apples and oranges.

Where to Find Datasets

- Enron Email Dataset: A public collection of real corporate emails (available on Kaggle).

- Apache SpamAssassin: A dataset of spam and non-spam emails, perfect for beginners.

- Your Own Inbox: Export emails (with caution) for a custom dataset, but ensure privacy compliance.

Cleaning and Preprocessing

Raw emails are messy. They contain HTML, signatures, and typos. Cleaning involves:

- Removing HTML tags and metadata.

- Extracting the email body and subject.

- Standardizing text (e.g., lowercase everything).
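
The cleaning steps above can be sketched with Python's standard library alone. This is a minimal illustration, not a production cleaner: the function name `extract_text` is made up for this example, the HTML stripping is a crude regular expression, and signature removal is not attempted.

```python
import html
import re
from email import message_from_string


def extract_text(raw_email: str) -> str:
    """Return the subject and body as one lowercase, plain-text string."""
    msg = message_from_string(raw_email)
    subject = msg.get("Subject", "")

    # Walk the message (including multipart ones) and keep text parts only.
    parts = []
    for part in msg.walk():
        if part.get_content_maintype() == "text":
            payload = part.get_payload(decode=True)
            if payload is not None:
                charset = part.get_content_charset() or "utf-8"
                parts.append(payload.decode(charset, errors="replace"))
    body = "\n".join(parts)

    body = re.sub(r"<[^>]+>", " ", body)  # drop HTML tags (crude, regex-based)
    body = html.unescape(body)            # turn entities like &amp; into &
    text = f"{subject} {body}".lower()    # standardize case
    return re.sub(r"\s+", " ", text).strip()
```

Passing a raw message string (headers, a blank line, then the body) returns a single cleaned string that the later steps can tokenize.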

Labeling Emails

Each email needs a label: “spam,” “primary,” or “promotions.” For example:

- Spam: “Win $1,000 now!”

- Primary: “Meeting tomorrow at 10 AM.”

- Promotions: “50% off your next purchase!”

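If using a public dataset, labels are often included, though usually only as spam versus non-spam, so a “promotions” category typically has to be added by hand. For personal emails, you’ll need to label them manually or semi-automatically.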
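
One possible reading of "semi-automatic" labeling is to let simple keyword rules suggest a label and have a person confirm or correct it. The sketch below assumes that workflow. The `RULES` table and the `suggest_label` function are hypothetical, and the keywords are placeholders you would tune against your own inbox.

```python
# Hypothetical keyword rules; these are illustrative, not a vetted list.
RULES = {
    "spam": ("win", "prize", "lottery"),
    "promotions": ("% off", "sale", "discount"),
}


def suggest_label(text: str, default: str = "primary") -> str:
    """Return a suggested label for a human reviewer to confirm or correct."""
    lowered = text.lower()
    for label, keywords in RULES.items():
        # Plain substring matching: simple, but "win" also matches "window".
        if any(keyword in lowered for keyword in keywords):
            return label
    return default
```

The suggestions are only a starting point. Because the rules are so blunt, every suggested label should still be reviewed before it goes into the training set.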

Step 2: Text Preprocessing with NLP

Emails are text, but computers need numbers. Natural Language Processing (NLP) turns words into data a machine can understand. Imagine translating a book into a code only your AI can read.

Key NLP Techniques

- Tokenization: Split text into words (e.g., “Meeting tomorrow” → [“meeting,” “tomorrow”]).

- Removing Stop Words: Drop common words like “the” or “is” that add little meaning.

- Lemmatization: Reduce words to their root (e.g., “running” → “run”).
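
Tokenization and stop-word removal are simple enough to sketch in plain Python. The stop-word list here is a tiny made-up sample, and lemmatization is left out because it needs a dictionary-backed library such as NLTK or spaCy.

```python
import re

# A tiny illustrative stop-word list; real lists are much longer.
STOP_WORDS = {"the", "is", "a", "an", "at", "to", "and", "of", "in"}


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def remove_stop_words(tokens: list[str]) -> list[str]:
    """Drop tokens that appear in the stop-word list."""
    return [token for token in tokens if token not in STOP_WORDS]
```

For example, `remove_stop_words(tokenize("The meeting is at 10"))` keeps only `"meeting"` and `"10"`.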

Vectorization

Words must become numbers. Here’s how:

- Bag of Words: Counts how often each vocabulary word appears (e.g., with the vocabulary [“buy,” “meeting,” “now”], the text “buy now, buy” → [2, 0, 1]).

- TF-IDF: Weighs important words higher (e.g., “urgent” matters more than “the”).

- Word2Vec: Captures word meaning (e.g., “meeting” is similar to “appointment”).

For advanced users, BERT or transformers can analyze context, but they’re complex and optional for beginners.
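
To make the first two techniques concrete, here is a small hand-rolled sketch of Bag of Words and TF-IDF on three toy documents. It uses the textbook formula (term frequency times the log of inverse document frequency). Libraries such as scikit-learn apply smoothing and normalization on top, so their numbers will differ.

```python
import math
from collections import Counter

# Three already-tokenized toy emails (made up for this example).
docs = [
    ["buy", "now", "buy"],
    ["meeting", "now"],
    ["urgent", "meeting"],
]

# The vocabulary fixes which position in the vector belongs to which word.
vocab = sorted({word for doc in docs for word in doc})


def bag_of_words(doc):
    """Count how often each vocabulary word occurs in the document."""
    counts = Counter(doc)
    return [counts[word] for word in vocab]


def tf_idf(doc):
    """Weight each word by its frequency here and its rarity across docs."""
    counts = Counter(doc)
    vector = []
    for word in vocab:
        tf = counts[word] / len(doc)
        df = sum(1 for other in docs if word in other)
        idf = math.log(len(docs) / df)
        vector.append(tf * idf)
    return vector
```

With the vocabulary `["buy", "meeting", "now", "urgent"]`, `bag_of_words(docs[0])` gives `[2, 0, 1, 0]`. In the TF-IDF vector for the same email, "buy" outweighs "now", because "now" also appears in another document and is therefore less distinctive.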

Example

Raw email: “Buy cheap watches now!”

After preprocessing: [“buy,” “cheap,” “watch”]

After vectorization: [0.7, 0.3, 0.9] (simplified numbers).

Step 3: Choosing the Right ML Model

Your AI needs a brain, a machine learning model, to classify emails. Think of it as choosing a chef for your kitchen. Some are simple but reliable; others are fancy but harder to manage.

Simple Models

- Naive Bayes: Fast and great for text. Assumes each word contributes to the prediction independently of the others (e.g., “cheap” raises the spam score regardless of the words around it).

- Logistic Regression: Predicts categories with high accuracy for basic tasks.
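
To show what the independence assumption means in practice, here is a from-scratch sketch of a multinomial Naive Bayes classifier with add-one smoothing. The function names and the toy data are made up for illustration; in a real project you would more likely use a library implementation.

```python
import math
from collections import Counter, defaultdict


def train_naive_bayes(docs, labels):
    """Count class frequencies and per-class word frequencies."""
    class_counts = Counter(labels)
    word_counts = defaultdict(Counter)
    for tokens, label in zip(docs, labels):
        word_counts[label].update(tokens)
    vocab = {word for tokens in docs for word in tokens}
    return class_counts, word_counts, vocab


def predict(tokens, class_counts, word_counts, vocab):
    """Pick the class with the highest log prior plus summed log likelihoods."""
    total_docs = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label, n_docs in class_counts.items():
        score = math.log(n_docs / total_docs)
        total_words = sum(word_counts[label].values())
        for word in tokens:
            if word not in vocab:
                continue  # ignore words never seen during training
            # Independence assumption: each word adds its own term to the
            # score. Add-one smoothing avoids log(0) for unseen pairs.
            likelihood = (word_counts[label][word] + 1) / (total_words + len(vocab))
            score += math.log(likelihood)
        if score > best_score:
            best_label, best_score = label, score
    return best_label


docs = [["buy", "cheap", "watch"], ["cheap", "pills", "buy"],
        ["meeting", "tomorrow"], ["project", "meeting", "notes"]]
labels = ["spam", "spam", "primary", "primary"]
model = train_naive_bayes(docs, labels)
```

Calling `predict(["cheap", "watch"], *model)` returns `"spam"` on this toy data, because both words were seen more often in spam and each one adds to the spam score on its own.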

Advanced Models

- LSTM (Deep Learning): Understands word order (e.g., “not urgent” vs. “urgent”).

- Transformers: Cutting-edge but slow and resource-heavy.

Pros and Cons

| Model | Accuracy | Training Time | Complexity |
| --- | --- | --- | --- |
| Naive Bayes | Good | Fast | Low |
| Logistic Regression | Great | Moderate | Low |
| LSTM/Transformers | Excellent | Slow | High |

For beginners, start with Naive Bayes or Logistic Regression. They’re accurate and easy to implement.

Step 4: Training Your Classifier

Now, let’s teach your model to sort emails. It’s like training a dog: show it examples, reward correct guesses, and test its skills.

Splitting Data

Divide your dataset:

- Training Set (70%): Used to teach the model.

- Test Set (30%): Used to check its performance.
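
A 70/30 split can be sketched in a few lines of plain Python. The helper name `split_dataset` is made up for this example. scikit-learn's `train_test_split` does the same job and can also keep class proportions equal through its `stratify` argument.

```python
import random


def split_dataset(emails, labels, test_fraction=0.3, seed=42):
    """Shuffle the (email, label) pairs, then cut them into train and test."""
    pairs = list(zip(emails, labels))
    random.Random(seed).shuffle(pairs)  # fixed seed keeps the split repeatable
    n_test = round(len(pairs) * test_fraction)
    test, train = pairs[:n_test], pairs[n_test:]
    return train, test
```

Shuffling before cutting matters: if the emails are stored sorted by label or by date, an unshuffled split would give the model a test set that looks nothing like its training data.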

Training Process

- Feed the model preprocessed emails and their labels.

- The model learns patterns (e.g., “urgent” often means primary).

- Adjust settings (hyperparameters) to improve accuracy.

Here’s a Python snippet using Naive Bayes:

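```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.naive_bayes import MultinomialNB

# Sample data (far too small for real training; for illustration only)
emails = ["Buy now!", "Meeting at 10", "50% off sale"]
labels = ["spam", "primary", "promotions"]

# Vectorize text: turn each email into a TF-IDF feature vector
vectorizer = TfidfVectorizer()
X = vectorizer.fit_transform(emails)

# Train model
model = MultinomialNB()
model.fit(X, labels)

# Classify a new email, reusing the already-fitted vectorizer
print(model.predict(vectorizer.transform(["Big sale, buy now!"])))
```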

Evaluation Metrics

Check how well your model performs:

- Accuracy: Percentage of correct predictions.

- Precision: How many “spam” predictions were actually spam?

- Recall: How many real spam emails were caught?

Aim for high precision on the spam class to avoid mislabeling important emails as spam.
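
The three metrics can be computed by hand from two lists of labels. The sketch below uses made-up predictions and treats "spam" as the class of interest. The function names are illustrative.

```python
def precision_recall(y_true, y_pred, positive="spam"):
    """Return (precision, recall) for one class, treated as the positive one."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def accuracy(y_true, y_pred):
    """Return the fraction of predictions that match the true labels."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


# Made-up labels for six emails.
y_true = ["spam", "spam", "spam", "primary", "primary", "promotions"]
y_pred = ["spam", "spam", "primary", "spam", "primary", "spam"]
```

Here the model predicted "spam" four times and was right twice, so precision is 0.5. It caught two of the three real spam emails, so recall is about 0.67. Accuracy is 0.5, since three of the six predictions were correct.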

Step 5: Testing & Improving Your AI Email Sorter

Your model is trained, but is it good? Test it with new emails and tweak it for better results.

Identifying Errors

- False Positives: Important emails marked as spam.

- False Negatives: Spam emails slipping into your inbox.

Example: If “Meeting reminder” is labeled spam, check if words like “reminder” confused the model.
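
To inspect errors like this one, it helps to pull the misclassified emails out of the test set and read them. A minimal sketch, assuming you already have the true labels and the model's predictions as two lists (the function name `find_errors` is made up):

```python
def find_errors(emails, y_true, y_pred, positive="spam"):
    """Group misclassified emails into false positives and false negatives."""
    false_positives, false_negatives = [], []
    for text, truth, guess in zip(emails, y_true, y_pred):
        if guess == positive and truth != positive:
            false_positives.append(text)   # legitimate email flagged as spam
        elif truth == positive and guess != positive:
            false_negatives.append(text)   # spam that slipped through
    return false_positives, false_negatives
```

Reading through the two lists often shows a pattern, such as a single word that appears in most of the false positives.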

Fine-Tuning

- Add more data to improve accuracy.

- Adjust model parameters (e.g., tweak TF-IDF settings).

- Try a different model if accuracy is low.

Ongoing Training

Emails evolve. Spammers get sneakier. Retrain your model periodically with new data to keep it sharp.

Challenges You Might Face

Building an AI email sorter isn’t always smooth. Here are common hurdles:

Small or Imbalanced Datasets

- Issue: Too few emails or mostly spam in your dataset.

- Solution: Use public datasets or balance classes (e.g., equal spam and primary emails).
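
One simple way to balance classes is downsampling: randomly trimming every class to the size of the smallest one. The sketch below assumes that approach and a hypothetical helper named `downsample`. It throws data away, so it suits cases where the larger classes have examples to spare.

```python
import random
from collections import defaultdict


def downsample(emails, labels, seed=0):
    """Randomly trim every class to the size of the smallest class."""
    by_label = defaultdict(list)
    for text, label in zip(emails, labels):
        by_label[label].append(text)

    target = min(len(items) for items in by_label.values())
    rng = random.Random(seed)  # fixed seed keeps the sample repeatable

    balanced = []
    for label, items in by_label.items():
        for text in rng.sample(items, target):
            balanced.append((text, label))
    rng.shuffle(balanced)
    return balanced
```

The result is a shuffled list of `(email, label)` pairs with the same number of emails per class.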

Overfitting or Underfitting

- Overfitting: Model memorizes data but fails on new emails.

- Underfitting: Model is too simple to learn patterns.

- Solution: Adjust model complexity or add regularization.

Spam Evasion Tactics

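Spammers use tricks like misspelling (“v1agra”). Word-level methods, including standard Word2Vec, treat a misspelled word as an unseen token, so character-level features (e.g., character n-grams) or subword embeddings such as fastText are better suited to catching these.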
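
One way to make a misspelling like "v1agra" look similar to the original word is to compare character n-grams instead of whole words. The sketch below is an illustration of that idea, with made-up function names. It measures how many three-character chunks two words share.

```python
def char_ngrams(word: str, n: int = 3) -> set[str]:
    """Return the set of character n-grams, with < and > marking word edges."""
    padded = f"<{word.lower()}>"
    return {padded[i:i + n] for i in range(len(padded) - n + 1)}


def ngram_overlap(a: str, b: str, n: int = 3) -> float:
    """Jaccard similarity between the character n-gram sets of two words."""
    grams_a, grams_b = char_ngrams(a, n), char_ngrams(b, n)
    union = grams_a | grams_b
    return len(grams_a & grams_b) / len(union) if union else 0.0
```

As whole words, "viagra" and "v1agra" have nothing in common, but they share the chunks "agr", "gra", and "ra>", so their overlap is well above zero, while an unrelated word like "meeting" scores 0. Feeding such n-grams to the vectorizer instead of whole words gives the model a chance to recognize obfuscated spam terms.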

Add-on: How to Deploy It (Optional)

Want your sorter to work with your inbox? Here’s how to take it live.

Local Script vs. Gmail API

- Local Script: Run your model on your computer to sort exported emails.

- Gmail API: Connect your model to Gmail for real-time sorting (requires OAuth setup).

Automation Tools

- Use cloud platforms like AWS or Google Cloud for 24/7 sorting.

- Schedule retraining with tools like Airflow.

Privacy and Security

- Never store sensitive emails on public servers.

- Use encryption for data transfers.

- Comply with GDPR or CCPA if handling personal data.

Conclusion

You’ve learned to build an email classifier from scratch! You prepared a dataset, preprocessed text with NLP, chose a model, trained it, and tested it. Even a simple AI email sorter can save hours each week. Experiment with different models and datasets. Keep tweaking, and your inbox will thank you!